Raenelle Manning is an IPilogue Writer and a 2L JD Candidate at Osgoode Hall Law School.

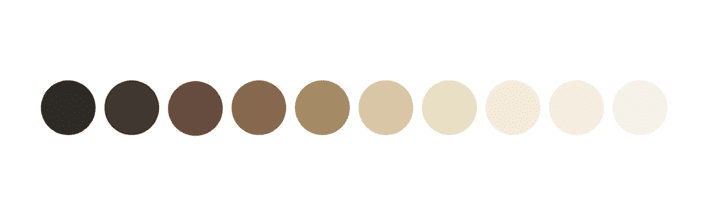

On May 11th, 2022, Google unveiled the Monk Skin Tone (MST) Scale, a 10-point scale designed to increase skin tone representation in image-based machine learning and artificial intelligence (AI) systems. The MST Scale is expected to be a more inclusive alternative to the tech industry’s current standard, the 6-point Fitzpatrick Scale, which has generally underrepresented people with darker skin tones.

This representative scale is the result of a collaboration between the Google Research Center for Responsible AI and Human Centred Technology (RAI-HCT) and Dr. Ellis Monk, a Harvard professor and sociologist, who drew on his extensive research on racial inequality and colourism. By developing this scale, Monk hoped to disassociate race from skin tone, given that racial and ethnic groups often include a spectrum of skin tones. According to Monk, “A lot of the time people feel that they are lumped together into racial categories: the Black category, White category, the Asian category, etc., but in this there’s all this difference. You need a much more fine-grain complex understanding that will really do justice to this distinction between a broad racial category and all these phenotypic differences across these categories”.

The MST Scale will be integrated into Google Images search, along with other Google products. Google’s search field will include a feature that allows users to refine their results by skin tone. For example, beauty-related searches like “prom make up looks” can be filtered to show images of people with the selected skin shade. Google also intends to create a standardized method of labelling web content with attributes like skin tone, hair texture and hair colour to increase representation.

Photo of Google’s 10 Shades of the Monk Skin Tone Scale

Skin Tone Equity in Technology

Computer vision is a type of AI that allows computers to “see and understand images”, but when these systems are not intentionally built to include a spectrum of skin tones, they have been found to perform poorly on darker skin. Dr. Courtney Heldreth, a core researcher on the RAI-HCT team, states that “skin tone plays a significant role in how people are treated … and one example of colorism is when technology doesn’t see skin tone accurately, potentially exacerbating existing inequities”.

The issue of colour-biased technology has previously been raised in relation to facial recognition algorithms. Facial recognition is another domain of computer vision, one that uses AI and machine learning to identify human faces in digital images.

As an MIT student, Joy Buolamwini, a dark-skinned Black woman, noticed that some facial recognition systems could not detect her face until she put on a white mask. In an effort to advocate for “algorithmic accountability”, she published an empirical study entitled “Gender Shades” in 2018. The study assesses the facial analysis software offered by Microsoft, IBM and Face++. Its objective was to determine how well these systems could identify the gender of people with various skin tones. She tested each company’s system using a personally developed data set of 1270 faces of people with light and dark skin tones. The data set comprised male and female parliamentarians from three African nations and three Nordic nations. The subjects’ skin tones were labelled using the six-point Fitzpatrick scale. The results revealed that “darker-skinned females are the most misclassified group with error rates of up to 34.7%, while the error rate for lighter skinned males is 0.8%.”
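The kind of comparison the study reports, error rates broken out by skin tone and gender rather than one overall figure, can be illustrated with a minimal sketch. It assumes a hypothetical record layout (`skin`, `gender`, `predicted`) and uses a handful of invented toy records; none of it is the study's data, code or method.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {(skin_group, gender): share of records misclassified}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for r in records:
        key = (r["skin"], r["gender"])
        totals[key] += 1
        # A record counts as an error when the predicted gender
        # differs from the labelled gender.
        if r["predicted"] != r["gender"]:
            errors[key] += 1
    return {key: errors[key] / totals[key] for key in totals}


# Toy records invented for illustration only; NOT data from the study.
toy_records = [
    {"skin": "darker", "gender": "female", "predicted": "male"},
    {"skin": "darker", "gender": "female", "predicted": "female"},
    {"skin": "darker", "gender": "male", "predicted": "male"},
    {"skin": "lighter", "gender": "female", "predicted": "female"},
    {"skin": "lighter", "gender": "male", "predicted": "male"},
    {"skin": "lighter", "gender": "male", "predicted": "male"},
]

rates = error_rates_by_group(toy_records)

# The single overall error rate, for comparison with the per-group rates.
overall = sum(r["predicted"] != r["gender"] for r in toy_records) / len(toy_records)

for group, rate in sorted(rates.items()):
    print(group, f"{rate:.0%}")
print("overall", f"{overall:.0%}")
```

In this toy example the overall error rate looks modest, while every error falls on a single subgroup. That is the pattern a disaggregated evaluation is meant to surface.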

The study emphasizes that AI software is only as smart as the data sets used to train it. The central issue was that the data sets used to develop the facial analysis software did not include a broad range of skin shades. Even if those data sets had been more inclusive, the Fitzpatrick scale would not have adequately represented the range of darker shades. Since this study, there have been improvements to the accuracy of these companies’ products. Microsoft claims to have taken a more serious approach to addressing AI bias in its products.

Monk Skin Tone Scale Hopes to Improve Technology

There are serious implications to using computer vision systems that perform poorly on darker skin tones. For example, the facial recognition software employed by law enforcement in the United States has been found to disproportionately misidentify African-Americans as suspected criminals. This is because African-Americans are underrepresented in the datasets used to develop the software, but overrepresented in mugshot databases. As a result, these biased AI systems have the potential to exacerbate racial disparities in the criminal justice system and threaten civil liberties.

By making the MST Scale publicly available for research and product development, Google hopes to help the tech industry build systems that work better for people of all skin tones. The scale is intended to support the creation of representative data sets for training AI models and evaluating them for fairness. As our society becomes increasingly dependent on AI processes, it is important that these technologies are developed responsibly and with inclusivity and diversity in mind.